Foundations of Computer Architecture: Registers, Instruction Set Design, Memory Addressing Modes, and CISC/RISC Principles.

Computer architecture, like many other concepts in computer science, can be quite tough to comprehend. Between learning about the various low-level hardware components of a computer and understanding how they interact with other hardware and software components, you might end up with a messy crime scene board if you don't approach it carefully. Luckily for you, this article eases you into the world of computer architecture by explaining fundamentals such as Registers, Instruction Set Design, Memory Addressing Modes, and CISC/RISC Principles with relatable analogies. So, if you're an aspiring hardware engineer, software developer, security analyst, or just a CS student looking to pass a class, this one's for you.

What is Computer Architecture?

Let's build our definition from the simple to the complex. Computer architecture involves designing the plans for constructing computers. Imagine you are a choirmaster coordinating your choir for a performance. You give instructions not only to well-known parts like bass, tenor, soprano and alto, but also to lesser-known parts like baritone, countertenor and mezzo-soprano. You control how these parts interact with each other and respond to different situations to produce music. Computer architecture plays the same coordinating role for the components of a computer.

At its core, computer architecture addresses how hardware (such as the CPU and memory) and software components are interconnected and interact to perform computational tasks, based on a specified range of instructions (the instruction set) that the hardware can execute. Computer architecture also encompasses the memory hierarchy, including registers, caches, main memory (RAM), and secondary storage (e.g., hard drives, solid-state drives), as well as the mechanisms for accessing and managing memory resources efficiently.

Registers

What's the first thing that comes to mind when you see the word "registers"? If it's a cash register, you're in luck, because that's exactly what our analogy is based on.

When a cash transaction is made in a store, the money received is initially kept in the cash register. It serves as a temporary, quick-access storage location for the money. That way, the cashier is able to quickly and efficiently perform operations like giving customers change.

Computer registers play a similar role. They are small, high-speed storage locations inside the central processing unit (CPU) that hold data temporarily during program execution, so that instructions can be executed efficiently.

Types of Registers

Registers are essential components of a CPU architecture, providing fast, temporary storage for data, instructions, and control information during program execution. They can be grouped into three main categories: data registers, address registers, and control and status registers. Here's a breakdown of the main types of registers commonly found in CPUs:

Data Registers:

General-Purpose Registers (GPR): Used to store operands, intermediate results, and data manipulated by instructions. GPRs serve various computational and data manipulation purposes within the CPU.

Memory Data Register (MDR): Temporarily holds data read from or written to memory during load and store operations. Facilitates data transfer between the CPU and memory.

Address Registers:

Program Counter (PC): Holds the memory address of the next instruction to be fetched and executed. Crucial for controlling the flow of program execution and supporting branching and subroutine calls.

Memory Address Register (MAR): Holds the memory address of data to be read from or written to memory. Facilitates memory addressing and data transfer between the CPU and memory.

Control and Status Registers:

Instruction Register (IR): Holds the currently fetched instruction from memory, including the opcode and operand(s). Used by the CPU to decode and execute instructions.

Flag Register: Holds status flags that indicate the outcome of arithmetic and logic operations, such as zero, carry, overflow, and sign flags. Used to track and control program execution based on the results of operations.

Each type of register plays a distinct role in CPU operation, supporting instruction execution, memory access, data manipulation, and control flow. Together with the general-purpose registers that make up the CPU's register file, these special-purpose registers enable efficient computation and data processing in modern computer systems.
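To see how these registers cooperate, here is a minimal sketch of a fetch-decode-execute cycle in Python. It assumes a made-up machine: memory is a Python list, each instruction is a tuple such as `("LOAD", 0, 4)`, and the `CPU` class, the `read_memory`, `step` and `run` functions and the `LOAD`/`SUB`/`HALT` opcodes are all hypothetical, chosen only to show where the PC, MAR, MDR, IR, general-purpose registers and a zero flag come into play.

```python
from dataclasses import dataclass, field


@dataclass
class CPU:
    """Register state of a hypothetical toy CPU."""
    pc: int = 0          # Program Counter: address of the next instruction
    mar: int = 0         # Memory Address Register: address being accessed
    mdr: object = None   # Memory Data Register: value just read from memory
    ir: tuple = ()       # Instruction Register: instruction being executed
    gpr: list = field(default_factory=lambda: [0] * 4)  # general-purpose R0-R3
    zero_flag: bool = False  # one bit of the flag register


def read_memory(cpu, memory, address):
    """Every memory read goes through the MAR (address) and MDR (data)."""
    cpu.mar = address
    cpu.mdr = memory[cpu.mar]
    return cpu.mdr


def step(cpu, memory):
    """Run one fetch-decode-execute cycle; return False after HALT."""
    # Fetch: copy the instruction at PC into IR, then advance PC.
    cpu.ir = read_memory(cpu, memory, cpu.pc)
    cpu.pc += 1
    # Decode: split the instruction into an opcode and its operands.
    opcode, *operands = cpu.ir
    # Execute
    if opcode == "LOAD":        # LOAD rd, addr : gpr[rd] <- memory[addr]
        rd, addr = operands
        cpu.gpr[rd] = read_memory(cpu, memory, addr)
    elif opcode == "SUB":       # SUB rd, rs : gpr[rd] <- gpr[rd] - gpr[rs]
        rd, rs = operands
        cpu.gpr[rd] -= cpu.gpr[rs]
        cpu.zero_flag = cpu.gpr[rd] == 0   # update the flag register
    elif opcode == "HALT":
        return False
    else:
        raise ValueError(f"unknown opcode: {opcode}")
    return True


def run(cpu, memory):
    """Keep stepping until the program executes HALT."""
    while step(cpu, memory):
        pass


# Program in addresses 0-3, data in addresses 4-5.
memory = [("LOAD", 0, 4), ("LOAD", 1, 5), ("SUB", 0, 1), ("HALT",), 7, 7]
cpu = CPU()
run(cpu, memory)
print(cpu.gpr[0], cpu.zero_flag, cpu.pc)  # 0 True 4
```

Notice that `SUB` never touches memory: its operands are already in general-purpose registers, and its result sets the zero flag, which a conditional branch could later test.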

Importance of Registers

Because registers are small, high-speed storage locations inside the CPU, they offer several advantages for computation speed, including:

Since registers are located within the CPU, storing and retrieving data in them is much faster than using other storage media such as RAM.

Registers reduce the time the CPU needs to perform operations by letting it keep operands close at hand instead of repeatedly fetching them from memory.

Loops run faster when loop variables such as counters and running totals are kept in registers, because each iteration avoids memory accesses, which improves algorithm efficiency.

Compilers make heavy use of registers when translating high-level language code into machine code: a step called register allocation decides which variables to keep in registers so that the generated program runs faster.
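The benefit of keeping a loop's running total in a register can be made concrete by counting memory accesses. The function below is a toy cost model, not a measurement of real hardware: it assumes every array element costs one memory read, that a running total kept in memory must be loaded and stored back on every iteration, and that a running total kept in a register is written to memory only once at the end. The function name `count_memory_accesses` is hypothetical.

```python
def count_memory_accesses(values, accumulator_in_register):
    """Sum `values` and count memory reads/writes under a toy cost model."""
    memory = {"total": 0}   # the running total's home in main memory
    register = 0            # a CPU register that can hold the running total
    accesses = 0
    for v in values:
        accesses += 1                        # read the array element from memory
        if accumulator_in_register:
            register += v                    # the add happens entirely inside the CPU
        else:
            total = memory["total"]          # load the running total...
            memory["total"] = total + v      # ...add, then store it back
            accesses += 2
    if accumulator_in_register:
        memory["total"] = register           # write the result back once
        accesses += 1
    return memory["total"], accesses


data = [1, 2, 3, 4, 5]
print(count_memory_accesses(data, accumulator_in_register=False))  # (15, 15)
print(count_memory_accesses(data, accumulator_in_register=True))   # (15, 6)
```

Both versions compute the same sum, but under this model the register version needs n + 1 memory accesses instead of 3n, so the gap grows with the length of the loop.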

Instruction Set Design

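This might be the most straightforward part yet. Instruction set design is the process of defining the set of instructions a CPU can execute, along with how each instruction is formatted and which operands and addressing modes it supports. The resulting instruction set architecture (ISA) is the interface between software and hardware.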
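Part of designing an instruction set is deciding how each instruction is encoded in binary. The sketch below assumes a hypothetical 16-bit format with a 4-bit opcode, two 4-bit register fields and a 4-bit immediate; the opcode names and values in `OPCODES`, and the `encode`/`decode` helpers, are made up for illustration and do not belong to any real ISA.

```python
# Hypothetical 16-bit instruction format:
#   bits 15-12: opcode | bits 11-8: rd | bits 7-4: rs | bits 3-0: imm
OPCODES = {"ADD": 0x1, "SUB": 0x2, "ADDI": 0x3}
NAMES = {code: name for name, code in OPCODES.items()}


def encode(opcode, rd, rs, imm):
    """Pack one instruction into a 16-bit integer."""
    for field_name, value in (("rd", rd), ("rs", rs), ("imm", imm)):
        if not 0 <= value <= 0xF:
            raise ValueError(f"{field_name}={value} does not fit in 4 bits")
    return (OPCODES[opcode] << 12) | (rd << 8) | (rs << 4) | imm


def decode(word):
    """Unpack a 16-bit instruction word into (opcode, rd, rs, imm)."""
    return (NAMES[(word >> 12) & 0xF], (word >> 8) & 0xF,
            (word >> 4) & 0xF, word & 0xF)


word = encode("ADDI", 2, 2, 5)   # e.g. R2 <- R2 + 5
print(hex(word))                 # 0x3225
print(decode(word))              # ('ADDI', 2, 2, 5)
```

Even this tiny format forces design trade-offs: four opcode bits allow at most 16 distinct instructions, four register bits limit the machine to 16 registers, and immediates must fit between 0 and 15.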

Significance of Instruction Set Design and Its Impact on Performance

Imagine you have to paint a room and you are instructed to use a detail brush and an artist's palette. That would take you ages compared to being instructed to use buckets of paint and a roller. The keyword here is instructed. Instructions dictate the courses of action you can take to achieve a goal, and when they are well chosen, they make tasks easy to accomplish while making efficient use of the tools at your disposal. An instruction set plays the same role for a computer. A well-designed instruction set can:

Significantly influence program performance by optimizing instruction execution and reducing latency.

Maximize hardware utilization by fully exploiting processor capabilities and reducing idle time.

Lower memory bandwidth requirements by minimizing instruction and data accesses.

Improve energy efficiency by reducing power consumption during program execution.

Improve software development productivity by making code easier to write and optimize.

Promote software compatibility and portability, since programs compiled for an instruction set can run on any processor that implements it.

Types of Instructions

There are three main types of instructions commonly found in computer architecture. Together they cover the broad set of operations a computer performs:

Data Movement Instructions: Data movement instructions are used to transfer data between memory locations, registers, and other storage elements within the computer system. These instructions are essential for loading data into registers, storing data in memory, and moving data between different memory locations.

Arithmetic and Logic Instructions: Arithmetic and logic instructions are used to perform mathematical operations (arithmetic) and logical operations (logic) on data stored in registers or memory. These instructions enable the CPU to perform calculations, comparisons, and bitwise operations on numerical and logical data.

Control Flow Instructions: Control flow instructions are used to control the flow of program execution by altering the sequence of instructions executed by the CPU. These instructions include branching, jumping, looping, and subroutine call instructions that enable the CPU to make decisions and execute instructions conditionally.
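A tiny interpreter makes the three categories concrete. The sketch below assumes a hypothetical four-register machine whose instructions are Python tuples; the mnemonics (`MOVI`, `LOAD`, `STORE`, `ADD`, `SUB`, `JNZ`, `HALT`) and the `execute` function are illustrative and not taken from a specific real instruction set. The program sums the integers from 1 to n using all three kinds of instructions.

```python
def execute(program, memory):
    """Run `program` on a hypothetical four-register machine."""
    regs = {"R0": 0, "R1": 0, "R2": 0, "R3": 0}
    pc = 0
    while pc < len(program):
        op, *args = program[pc]
        pc += 1
        # Data movement instructions
        if op == "MOVI":            # MOVI rd, imm   : rd <- imm
            regs[args[0]] = args[1]
        elif op == "LOAD":          # LOAD rd, addr  : rd <- memory[addr]
            regs[args[0]] = memory[args[1]]
        elif op == "STORE":         # STORE rs, addr : memory[addr] <- rs
            memory[args[1]] = regs[args[0]]
        # Arithmetic and logic instructions
        elif op == "ADD":           # ADD rd, rs : rd <- rd + rs
            regs[args[0]] += regs[args[1]]
        elif op == "SUB":           # SUB rd, rs : rd <- rd - rs
            regs[args[0]] -= regs[args[1]]
        # Control flow instructions
        elif op == "JNZ":           # JNZ rs, target : jump if rs != 0
            if regs[args[0]] != 0:
                pc = args[1]
        elif op == "HALT":
            break
        else:
            raise ValueError(f"unknown opcode: {op}")
    return regs


# Sum 1..n, with n in memory[0] and the result written to memory[1].
# Assumes n >= 1; with n = 0 the counter would go negative and never reach 0.
program = [
    ("LOAD", "R1", 0),     # 0: R1 <- n (loop counter)
    ("MOVI", "R0", 0),     # 1: R0 <- 0 (running total)
    ("MOVI", "R2", 1),     # 2: R2 <- 1 (decrement step)
    ("ADD", "R0", "R1"),   # 3: total += counter
    ("SUB", "R1", "R2"),   # 4: counter -= 1
    ("JNZ", "R1", 3),      # 5: repeat from 3 while counter != 0
    ("STORE", "R0", 1),    # 6: memory[1] <- total
    ("HALT",),             # 7
]
memory = [5, 0]
execute(program, memory)
print(memory[1])  # 15
```

The loop itself is nothing more than a conditional jump (`JNZ`) back to an earlier instruction, which is how control flow instructions implement looping.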

Memory Addressing Modes

Memory addressing modes define the various ways an instruction can specify the location of the data it reads or writes, whether that location is inside the instruction itself, in a register, or in memory. These addressing modes determine how operands are accessed and manipulated during instruction execution. You can think of addressing modes as the spinner used in a game of Twister to assign limbs to differently colored circles, with the circles on the mat standing in for memory locations.

Different addressing modes offer flexibility and efficiency in accessing memory and are used based on the requirements of specific instructions and programming scenarios.

Types of Memory Addressing Modes

Immediate Addressing: In immediate addressing mode, the operand value is directly encoded within the instruction itself. The operand is specified as a constant value or data directly embedded within the instruction. Immediate addressing is commonly used for operations that involve constant values or immediate data.

Direct Addressing: In direct addressing mode, the instruction contains the memory address of the operand itself, and the CPU reads the operand from that address with a single memory access. Direct addressing is straightforward and efficient for accessing data stored at a specific memory location.

Indirect Addressing: Indirect addressing mode uses a memory address stored in a register or memory location to access the operand. The instruction contains a pointer or reference to the memory address where the actual operand is stored. Indirect addressing is useful for accessing data stored in memory through pointers or references.

Register Addressing: Register addressing mode specifies the operand as the content of a CPU register. The instruction contains the register identifier, and the operand value is retrieved directly from the specified register. Register addressing is fast and efficient for accessing frequently used data stored in registers.

Relative Addressing: Relative addressing mode calculates the operand address by adding a constant offset to the current program counter (PC) value. The instruction contains a constant offset value, and the operand address is computed by adding this offset to the address of the next instruction. Relative addressing is commonly used for branching and control flow instructions.
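The following sketch shows how a CPU might obtain an operand under each of the five modes above. It assumes a hypothetical machine where memory is a Python list, registers are a dictionary, and `pc` already holds the address of the next instruction; the function name `operand_value` is made up for illustration. Indirect addressing is shown through a memory location; going through a register instead would read `memory[regs[field]]`.

```python
def operand_value(mode, field, regs, memory, pc):
    """Return the operand that an instruction field refers to under `mode`."""
    if mode == "immediate":   # the field itself is the operand
        return field
    if mode == "register":    # the field names a register holding the operand
        return regs[field]
    if mode == "direct":      # the field is the operand's memory address
        return memory[field]
    if mode == "indirect":    # the field is the address of a pointer to the operand
        return memory[memory[field]]
    if mode == "relative":    # the field is an offset from the PC
        return memory[pc + field]
    raise ValueError(f"unknown addressing mode: {mode}")


memory = [0] * 16
memory[3] = 42     # the data we want
memory[8] = 3      # a pointer to memory[3]
memory[10] = 99    # data located 4 words after the next instruction
regs = {"R1": 7}
pc = 6             # address of the next instruction

for mode, field in [("immediate", 5), ("register", "R1"), ("direct", 3),
                    ("indirect", 8), ("relative", 4)]:
    print(mode, operand_value(mode, field, regs, memory, pc))
```

This prints 5, 7, 42, 42 and 99 for the five modes. The modes differ in cost: immediate and register operands need no memory access, direct needs one, and indirect through memory needs two. For a relative branch, the computed address `pc + field` would be loaded into the PC instead of being read from.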

Importance of Memory Addressing Modes

Just as choosing the fastest route minimizes travel time, selecting efficient addressing modes speeds up instruction execution: immediate and register operands need no extra memory access, while indirect addressing through memory needs two.

Just as organizing items on shelves maximizes storage space, efficient addressing modes optimize memory utilization.

Just as following a well-planned route reduces traffic congestion, keeping operands in registers reduces the number of trips the CPU makes to memory.

Just as smaller containers take up less storage space, compact encodings such as register numbers and short relative offsets keep instructions small and reduce a program's memory requirements.

Complex Instruction Set Computing (CISC) Architecture

CISC is one of the oldest approaches to CPU design. It was the dominant approach in the 1970s, a time when memory was very expensive. CISC was designed to reduce the size of programs in memory by packing several low-level operations into a single complex instruction. A good example of this would be the instruction "clean your room": a single complex instruction that encompasses simpler instructions like sweeping and putting your clothes away.

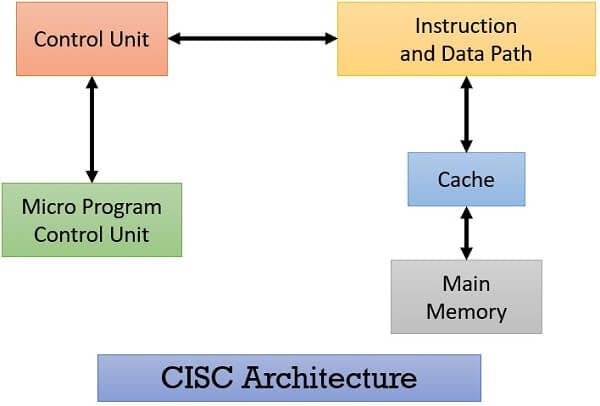

CISC Architecture Diagram

The CISC architecture diagram illustrates the internal structure and components of a Complex Instruction Set Computing (CISC) processor. It provides a visual representation of how instructions are executed and data is processed within the processor. Here are the functions of its components:

Microprogram Control Unit: This unit breaks each complex instruction into a sequence of simpler steps called microinstructions, stored in a control memory, and issues them in order to carry out the instruction.

Control Units: Control units oversee how instructions and data move around inside the processor, making sure everything happens in the right order.

Instruction and Data Path: This shows the pathways that instructions and data take as they move through the processor, kind of like roads on a map.

Cache and Main Memory: Cache is like a fast-access storage area close to the processor, holding frequently used data and instructions. Main memory is the larger, slower storage area where everything else is kept.
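To illustrate the microprogram control unit, the sketch below models a microcode table in which each complex instruction expands into a fixed sequence of simpler micro-operations. The instructions `ADDM` and `SUBM` (memory-to-memory add and subtract), the micro-operation names, and the `run_cisc` function are hypothetical; real microcode is far more detailed. Think of `ADDM` as the "clean your room" of instructions: one command that hides several smaller steps.

```python
# Hypothetical microcode table: complex instruction -> micro-operation sequence
MICROCODE = {
    "ADDM": ["load_a", "load_b", "add", "store_a"],   # mem[dst] <- mem[dst] + mem[src]
    "SUBM": ["load_a", "load_b", "sub", "store_a"],   # mem[dst] <- mem[dst] - mem[src]
}


def run_cisc(instruction, memory):
    """Execute one complex instruction by stepping through its microcode.

    Returns the number of micro-operations performed.
    """
    opcode, dst, src = instruction
    a = b = 0                                  # internal temporary registers
    for micro_op in MICROCODE[opcode]:
        if micro_op == "load_a":
            a = memory[dst]                    # read first operand from memory
        elif micro_op == "load_b":
            b = memory[src]                    # read second operand from memory
        elif micro_op == "add":
            a = a + b
        elif micro_op == "sub":
            a = a - b
        elif micro_op == "store_a":
            memory[dst] = a                    # write the result back to memory
    return len(MICROCODE[opcode])


memory = [10, 32]
steps = run_cisc(("ADDM", 0, 1), memory)
print(memory[0], steps)  # 42 4
```

In this model, a single `ADDM` instruction does the work of four micro-operations, which is why CISC programs are compact while individual instructions can take several clock cycles.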

Advantages of CISC Architecture

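Shorter Code Size: Because one CISC instruction can do the work of several simpler ones, CISC programs tend to be shorter, which reduces memory requirements.

Multi-Step Instructions: Each CISC instruction can accomplish several low-level tasks, such as loading a value, performing arithmetic on it, and storing the result, so fewer instructions need to be fetched.

Flexible Memory Access: Complex addressing modes provide flexible memory access options.

Memory Operands: CISC instructions can operate directly on values in memory without separate load and store instructions, which simplifies assembly-language programming.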

Disadvantages of CISC Architecture

Reduced Performance: Despite shorter code size, CISC instructions often require several clock cycles to execute, impacting overall performance.

Complex Pipelining Implementation: Pipelining CISC instructions is challenging because they vary in length and in the number of clock cycles they need.

Complex Hardware Structure: CISC architectures require more complex hardware to simplify software implementation.

Outdated Memory Optimization: Originally designed to minimize memory usage when memory was expensive, CISC architectures may not fully leverage today's inexpensive and abundant memory resources.

Reduced Instruction Set Computing (RISC) Architecture

RISC (Reduced Instruction Set Computing) processors prioritize simplicity and speed, employing basic instructions that execute quickly and predictably. RISC architectures follow a load-store design: only load and store instructions access memory, so data is loaded from memory into registers, processed there, and then stored back.

A good example of this would be a recipe for a simple sandwich. Each step in the recipe is like a single RISC instruction, such as slicing the bread or spreading the mayonnaise. Each step is straightforward and quick to execute, and by following the steps in order, you can assemble the sandwich efficiently.

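This simplicity also makes RISC processors a good match for compilers: because the instructions are simple and uniform, a compiler can more easily schedule them and keep values in registers to produce efficient programs.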
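The load-store style can be illustrated by performing the memory-to-memory addition `memory[0] <- memory[0] + memory[1]` with simple instructions. In this sketch, only `LW` (load word) and `SW` (store word) touch memory, while `ADD` works purely on registers. The mnemonics are loosely modeled on MIPS-style assembly, but the tuple format and the `run_risc` function are hypothetical.

```python
def run_risc(program, memory, num_registers=8):
    """Execute a list of simple load-store instructions; return the registers."""
    regs = [0] * num_registers
    for op, *args in program:
        if op == "LW":        # LW rd, addr : the only instruction that reads memory
            rd, addr = args
            regs[rd] = memory[addr]
        elif op == "SW":      # SW rs, addr : the only instruction that writes memory
            rs, addr = args
            memory[addr] = regs[rs]
        elif op == "ADD":     # ADD rd, rs1, rs2 : registers only, no memory access
            rd, rs1, rs2 = args
            regs[rd] = regs[rs1] + regs[rs2]
        else:
            raise ValueError(f"unknown opcode: {op}")
    return regs


program = [
    ("LW", 1, 0),       # R1 <- memory[0]
    ("LW", 2, 1),       # R2 <- memory[1]
    ("ADD", 3, 1, 2),   # R3 <- R1 + R2
    ("SW", 3, 0),       # memory[0] <- R3
]
memory = [10, 32]
run_risc(program, memory)
print(memory[0])  # 42
```

The work takes four instructions here, but each one is simple and uniform, which makes the sequence easy to pipeline and easy for a compiler to schedule. A value left in a register, such as R1, can also be reused by later instructions without reloading it from memory.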

RISC Architecture Diagram

In a RISC architecture, the components work together to efficiently process instructions and manage data. Here's a breakdown of their functions:

Instruction Cache: Think of the instruction cache as a recipe book. It stores frequently used instructions so that the processor can quickly access them, just like grabbing your most-used recipes from a well-organized book.

Hardwired Control Unit: Similar to a conductor leading an orchestra, the hardwired control unit directs the flow of instructions and data within the processor. It is built from fixed logic circuits rather than microcode, which lets it generate control signals quickly. It ensures that everything follows the right sequence, much like a conductor guiding musicians through a piece of music.

Data Path: The data path is like the kitchen where the actual cooking happens. It processes and manipulates data according to the instructions, much like a kitchen where ingredients are prepared and combined following a recipe.

Data Cache: Imagine a countertop near the cooking area where you keep commonly used ingredients. The data cache stores frequently accessed data, allowing the processor to quickly retrieve it, similar to having frequently used ingredients within easy reach in a kitchen.

Main Memory: Picture a pantry where you store all your ingredients. The main memory holds the entire set of instructions and data needed for the program, akin to a pantry storing all the ingredients for your recipes.

In essence, the RISC architecture ensures that instructions are quickly fetched from the instruction cache, processed in the data path, and that frequently used instructions and data are efficiently stored and retrieved from the caches, analogous to a well-organized kitchen making cooking (processing) faster and more efficient.

Conclusion

Throughout this article, we've explored fundamental elements such as registers, instruction set design, memory addressing modes, CISC, and RISC principles, likening them to everyday scenarios to demystify their complexities.

Understanding computer architecture is not just reserved for hardware engineers or software developers; it's a fundamental pillar that supports innovation across various fields, from security analysis to system optimization. By grasping the intricacies of how hardware and software interact within a computer system, we gain the power to optimize performance, enhance compatibility, and meet the diverse needs of modern computing.

So, whether you're embarking on a journey into computer science or simply seeking to broaden your understanding of technology, we hope these analogies have made computer architecture easier to grasp. Thank you for reading.